News

2024/08/09 3D-MuPPET accepted @GCPR2024 (Nectar Track).

2024/07/04 uni'kon features this work in #79 Freiheit, pp. 24-27. [German version]

2024/05/27 The University of Konstanz reports on this work in a press release. [English version]

2024/03/28 Paper accepted at the International Journal of Computer Vision. [paper]

2024/01/03 Preprint updated, code updated, datasets and weights uploaded! [Image Dataset, Wild-MuPPET Dataset, Weights]

2023/10/13 We have officially launched our project site and our Hugging Face demo for 2D posture estimation of pigeons in any environment. [Hugging Face]

Abstract

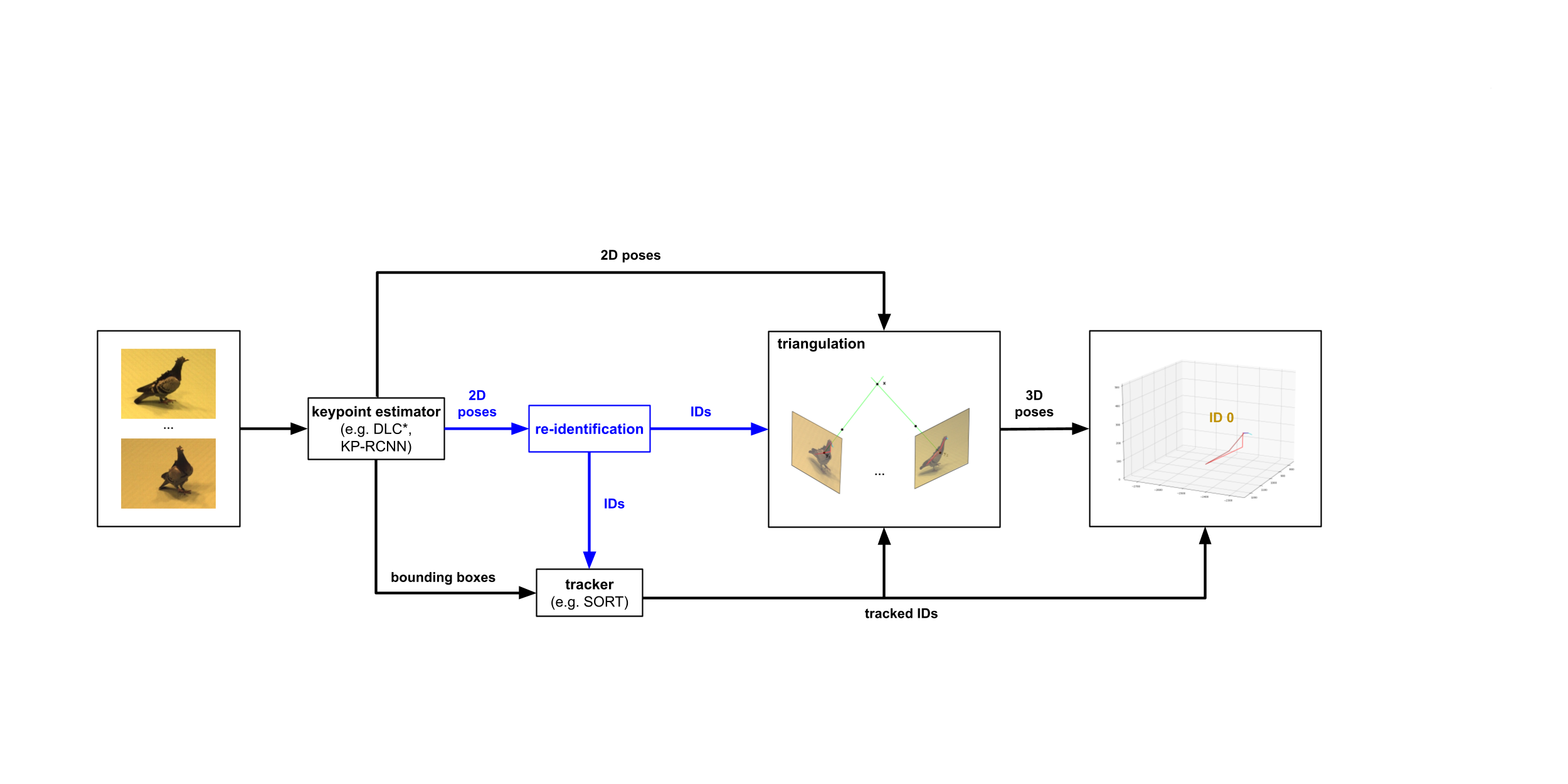

Markerless methods for animal posture tracking have been rapidly developing recently, but frameworks and benchmarks for tracking large animal groups in 3D are still lacking. To overcome this gap in the literature, we present 3D-MuPPET, a framework to estimate and track 3D poses of up to 10 pigeons at interactive speed using multiple camera views. We train a pose estimator to infer 2D keypoints and bounding boxes of multiple pigeons, then triangulate the keypoints to 3D. For identity matching of individuals in all views, we first dynamically match 2D detections to global identities in the first frame, then use a 2D tracker to maintain IDs across views in subsequent frames. We achieve comparable accuracy to a state-of-the-art 3D pose estimator in terms of median error and Percentage of Correct Keypoints. Additionally, we benchmark the inference speed of 3D-MuPPET, with up to 9.45 fps in 2D and 1.89 fps in 3D, and perform quantitative tracking evaluation, which yields encouraging results. Finally, we showcase two novel applications for 3D-MuPPET. First, we train a model with data of single pigeons and achieve comparable results in 2D and 3D posture estimation for up to 5 pigeons. Second, we show that 3D-MuPPET also works outdoors without additional annotations from natural environments. Both use cases simplify the domain shift to new species and environments, largely reducing the annotation effort needed for 3D posture tracking. To the best of our knowledge, we are the first to present a framework for 2D/3D animal posture and trajectory tracking that works in both indoor and outdoor environments for up to 10 individuals. We hope that the framework can open up new opportunities in studying animal collective behaviour and encourage further developments in 3D multi-animal posture tracking.
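The two accuracy measures named in the abstract, median error and Percentage of Correct Keypoints (PCK), can be illustrated with a short sketch. The function names, the array layout `(instances, keypoints, dims)`, and the choice of a per-instance reference length scaled by `alpha` as the PCK threshold are assumptions made for this example; the paper defines the exact protocol.

```python
import numpy as np


def keypoint_errors(pred, gt):
    """Euclidean distance per keypoint; pred and gt have shape (N, K, D)."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return np.linalg.norm(pred - gt, axis=-1)  # shape (N, K)


def median_error(pred, gt):
    """Median keypoint error, ignoring keypoints marked as NaN."""
    return float(np.nanmedian(keypoint_errors(pred, gt)))


def pck(pred, gt, ref_length, alpha=0.05):
    """Fraction of keypoints whose error is within alpha * ref_length.

    ref_length holds one reference size per instance (assumed here to be
    something like the largest ground-truth bounding box dimension).
    """
    err = keypoint_errors(pred, gt)
    thresh = alpha * np.asarray(ref_length, dtype=float)[:, None]  # (N, 1)
    thresh = np.broadcast_to(thresh, err.shape)
    valid = ~np.isnan(err)  # skip keypoints without ground truth
    return float((err[valid] <= thresh[valid]).mean())
```

Both functions accept 2D or 3D keypoints, since the distance is taken over the last axis.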

Additional Results

3D Pose Estimation and Tracking of Multiple Pigeons in Captive Environments

Using the 3D-POP dataset, we trained 2D keypoint detection models, then triangulated the detected postures into 3D. We also show that models trained on single-pigeon data work well on multi-pigeon data.
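As an illustration of the triangulation step, the sketch below recovers one 3D keypoint from its 2D detections in several calibrated views using linear (DLT) triangulation. The function name and the use of plain DLT are assumptions made for this example, not necessarily the exact procedure in 3D-MuPPET; each camera is assumed to be described by a 3x4 projection matrix.

```python
import numpy as np


def triangulate_point(proj_mats, points_2d):
    """Linear (DLT) triangulation of one keypoint from two or more views.

    proj_mats: iterable of 3x4 camera projection matrices.
    points_2d: iterable of (u, v) pixel coordinates, one per camera.
    Returns the 3D point as an array of shape (3,).
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        P = np.asarray(P, dtype=float)
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Applying this function to every keypoint of every matched individual yields a full 3D posture per frame; in practice, detections with low confidence would typically be dropped before triangulating.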

This video shows 3D keypoints from triangulation, reprojected to a single camera view.
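Reprojection of this kind amounts to applying a camera's projection matrix to the triangulated points. A minimal sketch, assuming a pinhole camera given as a 3x4 projection matrix and ignoring lens distortion (the function name is hypothetical):

```python
import numpy as np


def reproject(P, points_3d):
    """Project (K, 3) world points to (K, 2) pixel coordinates with a 3x4 matrix P."""
    pts = np.asarray(points_3d, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (K, 4) homogeneous points
    uvw = homog @ np.asarray(P, dtype=float).T        # (K, 3)
    return uvw[:, :2] / uvw[:, 2:3]                   # divide by depth
```

Comparing the reprojected points with the original 2D detections in the same view gives a reprojection error, a common sanity check for triangulated keypoints.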

This video shows 3D pose estimations of 10 foraging pigeons.

Pigeons in Outdoor Environments

Using the Segment Anything Model, we trained a 2D keypoint detector on masked pigeons from captive data, then applied the model to outdoor pigeon videos for 3D tracking in the wild.
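The masking idea can be sketched as follows: given a binary segmentation mask for a pigeon, the background pixels are replaced so that the detector sees only the animal. The function name and the constant fill value are assumptions for illustration, and the mask is taken as given rather than computed here.

```python
import numpy as np


def mask_background(image, mask, fill=0):
    """Keep pixels where mask is True and set all other pixels to `fill`.

    image: array of shape (H, W, C); mask: boolean array of shape (H, W).
    """
    image = np.asarray(image)
    mask = np.asarray(mask, dtype=bool)
    out = np.full_like(image, fill)
    out[mask] = image[mask]  # copy only the foreground pixels
    return out
```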

Cite us

@article{waldchan20243d,
  title={3D-MuPPET: 3D Multi-Pigeon Pose Estimation and Tracking},
  author={Waldmann, Urs and Chan, Alex Hoi Hang and Naik, Hemal and Nagy, M{\'a}t{\'e} and Couzin, Iain D and Deussen, Oliver and Goldluecke, Bastian and Kano, Fumihiro},
  journal={International Journal of Computer Vision},
  year={2024}
}